[CKA] Troubleshooting - 2

Case 3-1. Worker Node Failure

controlplane ~ ➜ kubectl get node

NAME STATUS ROLES AGE VERSION

controlplane Ready control-plane 3m16s v1.32.0

node01 NotReady <none> 2m33s v1.32.0

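What is the kubelet?

The kubelet is the Kubernetes agent that runs on each node. It carries out the instructions it receives from the kube-apiserver and keeps Pods running properly (starting Pods and running health checks).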

Checking the kubelet status

controlplane ~ ➜ systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Mon 2025-05-26 12:14:28 UTC; 4min 54s ago

Docs: https://kubernetes.io/docs/

Main PID: 4670 (kubelet)

...(omitted)

Active: active (running) might suggest that the kubelet is fine, but this command was run on controlplane, which is the control plane (master) node.

To see the kubelet on node01, the node that is actually NotReady, we have to check it over ssh.

controlplane ~ ➜ ssh node01 systemctl status kubelet

○ kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead) since Mon 2025-05-26 12:16:13 UTC; 5min ago

Docs: https://kubernetes.io/docs/

Process: 2803 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=0/SUCCESS)

Main PID: 2803 (code=exited, status=0/SUCCESS)

This confirms that the kubelet on node01 is inactive (dead).
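The check above can be scripted as a rough sketch. The helper name `kubelet_active_state` is hypothetical; it only reads the `Active:` line from `systemctl status` output on stdin, so it runs without touching any node.

```shell
# Hypothetical helper: print the state word from the "Active:" line of
# `systemctl status` output read on stdin (e.g. active, inactive, activating).
kubelet_active_state() {
  awk '$1 == "Active:" { print $2; exit }'
}

# Example against a live node (assumes ssh access to node01):
#   ssh node01 systemctl status kubelet | kubelet_active_state
```

When only the state is needed, `systemctl is-active kubelet` gives the same answer directly; the parser is mainly useful when the full status output has already been captured.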

Starting the kubelet on node01

controlplane ~ ➜ ssh node01 systemctl start kubelet

controlplane ~ ➜ ssh node01 systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Mon 2025-05-26 12:22:20 UTC; 3s ago

controlplane ~ ➜ kubectl get nodes

NAME STATUS ROLES AGE VERSION

controlplane Ready control-plane 8m27s v1.32.0

node01 Ready <none> 7m44s v1.32.0

node01 is now Ready!
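As a small sketch of the first step in this case, the filter below lists the nodes that are not Ready. The function name `not_ready_nodes` is hypothetical, and it assumes the default column layout of `kubectl get nodes` (name first, status second).

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the name of every node whose STATUS does not start with "Ready".
# A cordoned node ("Ready,SchedulingDisabled") still counts as Ready here.
not_ready_nodes() {
  awk '$1 != "NAME" && NF >= 2 && $2 !~ /^Ready(,|$)/ { print $1 }'
}

# Example against a live cluster:
#   kubectl get nodes | not_ready_nodes
```

Each name it prints is a candidate for `ssh <node> systemctl status kubelet`.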

Case 3-2. Worker Node Failure (2)

controlplane ~ ➜ ssh node01 systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Mon 2025-05-26 12:29:11 UTC; 5s ago

Docs: https://kubernetes.io/docs/

Process: 9860 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=1/FAILURE)

Main PID: 9860 (code=exited, status=1/FAILURE)

To find out why the kubelet keeps failing, check its logs on node01 over ssh.

controlplane /etc/kubernetes ✖ ssh node01 journalctl -u kubelet -f

May 26 12:36:53 node01 kubelet[13412]: Flag --pod-infra-container-image has been deprecated, will be removed in 1.35. Image garbage collector will get sandbox image information from CRI.

May 26 12:36:53 node01 kubelet[13412]: I0526 12:36:53.171954 13412 server.go:215] "--pod-infra-container-image will not be pruned by the image garbage collector in kubelet and should also be set in the remote runtime"

May 26 12:36:53 node01 kubelet[13412]: E0526 12:36:53.175395 13412 run.go:72] "command failed" err="failed to construct kubelet dependencies: unable to load client CA file /etc/kubernetes/pki/WRONG-CA-FILE.crt: open /etc/kubernetes/pki/WRONG-CA-FILE.crt: no such file or directory"

The log shows that the kubelet is configured with a CA file name that does not exist. The fix is to make the worker node reference the correct certificate file.
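Picking the failing line out of a noisy journal can be sketched as a filter. The name `kubelet_errors` is hypothetical; it relies on the klog convention visible in the log above, where error lines start with `E` followed by the month and day (for example `E0526`).

```shell
# Hypothetical helper: keep only error-level kubelet lines from journalctl
# output read on stdin. klog marks errors with "E" + MMDD, e.g. "E0526".
kubelet_errors() {
  grep -E 'kubelet\[[0-9]+\]: E[0-9]{4} '
}

# Example against a live node (assumes ssh access to node01):
#   ssh node01 journalctl -u kubelet --no-pager -n 50 | kubelet_errors
```

Using `--no-pager -n 50` instead of `-f` makes the command return right away, which is handier in a script than following the log.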

Since node01 keeps being the source of the problem, ssh into node01 and work there directly.

controlplane /var/lib/kubelet ➜ ssh node01

node01 /var/lib/kubelet ➜ cat /etc/kubernetes/kubelet.conf

apiVersion: v1

clusters:

(...)

- name: default-auth

user:

client-certificate: /var/lib/kubelet/pki/kubelet-client-current.pem

client-key: /var/lib/kubelet/pki/kubelet-client-current.pem

node01 /var/lib/kubelet ➜ cd /var/lib/kubelet

node01 /var/lib/kubelet ➜ ls

checkpoints config.yaml cpu_manager_state device-plugins kubeadm-flags.env memory_manager_state pki plugins plugins_registry pod-resources pods

node01 /var/lib/kubelet ➜ vi config.yaml

node01 /var/lib/kubelet ➜ cat config.yaml

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 0s

enabled: true

x509:

clientCAFile: /etc/kubernetes/pki/WRONG-CA-FILE.crt <---- this is the problem!

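After connecting to the worker node over ssh, I checked kubelet.conf. Its certificate entries gave a rough idea of where the kubelet keeps its files, so I moved to that directory (/var/lib/kubelet) and inspected the kubelet's config.yaml. The clientCAFile entry there pointed to a certificate file that does not exist, and correcting it resolved the issue.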
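The manual edit in vi can also be sketched as a one-line substitution. The function name `fix_client_ca` is hypothetical, and the replacement path `/etc/kubernetes/pki/ca.crt` in the usage line is an assumption based on the usual kubeadm layout; confirm that the file exists on the node before applying it.

```shell
# Hypothetical helper: rewrite the clientCAFile value in a kubelet config
# file, keeping the original indentation. A backup is saved as <file>.bak.
# Usage: fix_client_ca <config-file> <ca-path>
fix_client_ca() {
  file="$1"
  ca="$2"
  sed -i.bak -E "s|^([[:space:]]*clientCAFile:[[:space:]]*).*$|\1${ca}|" "$file"
}

# Example on node01 (the CA path is an assumption, check it first):
#   ls /etc/kubernetes/pki/
#   fix_client_ca /var/lib/kubelet/config.yaml /etc/kubernetes/pki/ca.crt
#   systemctl restart kubelet
```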

Case 3-3. Worker Node Failure (3)

ssh into the worker node that is not working and follow the kubelet logs with journalctl -u kubelet -f.

node01 ~ ➜ journalctl -u kubelet -f

May 26 12:53:46 node01 kubelet[20350]: E0526 12:53:46.211436 20350 kubelet_node_status.go:108] "Unable to register node with API server" err="Post \"https://controlplane:6553/api/v1/nodes\": dial tcp 192.168.129.204:6553: connect: connection refused" node="node01"

May 26 12:53:47 node01 kubelet[20350]: W0526 12:53:47.973080 20350 reflector.go:569] k8s.io/client-go/informers/factory.go:160: failed to list *v1.RuntimeClass: Get "https://controlplane:6553/apis/node.k8s.io/v1/runtimeclasses?limit=500&resourceVersion=0": dial tcp 192.168.129.204:6553: connect: connection refused

The API server port is wrong. The Kubernetes API server listens on port 6443 by default, but the kubelet on node01 is trying to connect to 6553.

node01 ~ ➜ cd /etc/kubernetes

node01 /etc/kubernetes ➜ cat kubelet.conf

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJYWZUNWdIbTZzVFl3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TlRBMU1qWXhNakEzTkRoYUZ3MHpOVEExTWpReE1qRXlORGhhTUJVeApFekFSQmdOVkJBTVRDbXQxWW1WeWJtVjBaWE13Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLCkFvSUJBUURLQ0FKd0F4NlZ3SXFIcHQwbU4zWW9uNHRvbkZocFd4L1NRRy9PQi9kSnNMcmhBb3Ezb1M2V0pBeHEKYWs0anRLSkFyUTFucXR3amhxMjN3NzBoWmVkNVR3MjRsOHhpYWZXUEdNTy91UGVwb3dEcGlSTVk0R3k3ZWdhWgpKKzByTmQ3c1Bzb2xvQitYb2JkMWxMQkR0aDluRkNMQ2tETUZNTUx1aHh0RUdTUkprQVZCZDlpZkNWMVp0Mnp2CjBzV015YS91SUVUaEV2MnJ4bE83SStnV3JhTjhDQkZCY1Zad1FRcWc0TzZiOUUyYWl0UlRuaFNrR2w3UGQ3QkQKclhXMVBhbTRTQUVDUXd0Qld2R2oxSk4yeXlkaWN0aTVJTmVicHJpKzh6NjRWUldwbkM3ZklXcXdDeVkwbHp0Mwo4K2tybWlDMnR5cE55NlF4bjdnZEk5L2RXRWRMQWdNQkFBR2pXVEJYTUE0R0ExVWREd0VCL3dRRUF3SUNwREFQCkJnTlZIUk1CQWY4RUJUQURBUUgvTUIwR0ExVWREZ1FXQkJRcW5jeTBKaGxnN2JIRXh3SnVRdDFqdUpJWDhEQVYKQmdOVkhSRUVEakFNZ2dwcmRXSmxjbTVsZEdWek1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQWQxZ2xIeEROMAp6VTBzdjRhNHZvV1gzQlZrQjBtNXFud2liaUpuQkU1a09qaHlFS0wrZUhFRW9BS09vTnVuNTNMVWtjOGJBZWlhCmxBcCtmODYyWGNmNEhlZy9nNjFZM0thWUhmVC9qQ3lHVUJYZnpBWXJydHRxSzhlc0xWajRwU3R5ZGlmYjY3ZEoKL1VqenJoRWNta1RBSTVNT0NqVlZxNzRaaEUwMWRuZUg2NG5vSGMwcEZUaDk3Rm5KdVZ1TXRYeTZZUG1SWk9rZQovTFJ0czdUY2d1VU1FeHA1U3c2cWlhSnZiZ21iSWZpa0ZMeElPdS93Wi84Rkd5bncxVythZTdXeWI0RkxBSjZZCnFQQ084SVRMYnBCSFV2aitiYi9GWSsxdksrN2VKSUJMbHFRNDA5TENHWFFvMTdycUE2NExzMDBXN0ZQcFUwK1YKQ3Jick5TWDVVbDFBCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://controlplane:6553 <- wrong port

name: default-cluster

Change the port above from 6553 to 6443, then restart the kubelet on the node so that the change takes effect!

node01 ~ ✖ systemctl restart kubelet
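The port change itself can be sketched as a substitution on the `server:` line. The function name `fix_apiserver_port` is hypothetical; it only touches a `server: https://host:port` line whose port matches the wrong value, and it keeps a `.bak` copy of the file.

```shell
# Hypothetical helper: replace the API server port on the "server:" line of
# a kubeconfig-style file. A backup is saved as <file>.bak.
# Usage: fix_apiserver_port <file> <wrong-port> <right-port>
fix_apiserver_port() {
  file="$1"
  wrong="$2"
  right="$3"
  sed -i.bak -E \
    "s|^([[:space:]]*server:[[:space:]]*https://[^:]+:)${wrong}[[:space:]]*$|\1${right}|" \
    "$file"
}

# Example on node01:
#   fix_apiserver_port /etc/kubernetes/kubelet.conf 6553 6443
#   systemctl restart kubelet
```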

Finally, confirm that the node is back in the Ready state.

controlplane /var/lib/kubelet ➜ kubectl get nodes

NAME STATUS ROLES AGE VERSION

controlplane Ready control-plane 47m v1.32.0

node01 Ready <none> 46m v1.32.0

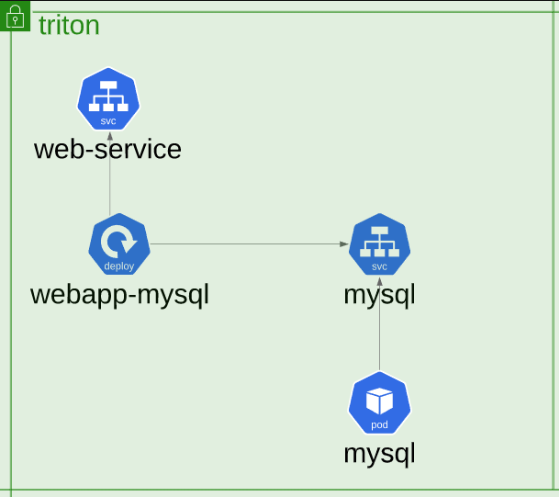

Case 4-1. Weave Net is not installed

A 2-tier application

Check the Pod status

root@controlplane ~ ➜ k get pods -n triton

NAME READY STATUS RESTARTS AGE

mysql 0/1 ContainerCreating 0 92s

webapp-mysql-7bd5857746-x6stl 0/1 ContainerCreating 0 92s

root@controlplane ~ ➜ k describe pod webapp-mysql-7bd5857746-x6stl -n triton

Name: webapp-mysql-7bd5857746-x6stl

Namespace: triton

Priority: 0

Service Account: default

(...)

c09a276fb113f84d85a17fd56b1c24a0f1388fc99aa510c61d3ce77f2d4780f": dial tcp 127.0.0.1:6784: connect: connection refused

Warning FailedCreatePodSandBox 116s (x16 over 2m11s) kubelet (combined from similar events): Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "ddc9c6f3e5c14290c9f6c0388bda815c6c301a191bdc5f04479b73d6ed500a2c": plugin type="weave-net" name="weave" failed (add): unable to allocate IP address: Post "http://127.0.0.1:6784/ip/ddc9c6f3e5c14290c9f6c0388bda815c6c301a191bdc5f04479b73d6ed500a2c": dial tcp 127.0.0.1:6784: connect: connection refused

Normal SandboxChanged 115s (x25 over 2m20s) kubelet Pod sandbox changed, it will be killed and re-created.

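None of the Pods could be created. According to describe, the weave-net plugin cannot reach 127.0.0.1:6784 when it tries to allocate an IP address, so the Weave Net DaemonSet does not appear to be running properly.

Check the state of the Weave Net DaemonSet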

root@controlplane ~ ➜ k get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-668d6bf9bc-nvvkp 1/1 Running 0 24m

coredns-668d6bf9bc-srr86 1/1 Running 0 24m

etcd-controlplane 1/1 Running 0 24m

kube-apiserver-controlplane 1/1 Running 0 24m

kube-controller-manager-controlplane 1/1 Running 0 24m

kube-proxy-blgc8 1/1 Running 0 24m

kube-scheduler-controlplane 1/1 Running 0 24m

Weave Net is not installed in the first place, so install it into kube-system.

curl -L https://github.com/weaveworks/weave/releases/download/latest_release/weave-daemonset-k8s-1.11.yaml | kubectl apply -f -

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 6183 100 6183 0 0 14846 0 --:--:-- --:--:-- --:--:-- 1567k

serviceaccount/weave-net created

clusterrole.rbac.authorization.k8s.io/weave-net created

clusterrolebinding.rbac.authorization.k8s.io/weave-net created

role.rbac.authorization.k8s.io/weave-net created

rolebinding.rbac.authorization.k8s.io/weave-net created

daemonset.apps/weave-net created

root@controlplane ~ ➜ k get pods -n triton

NAME READY STATUS RESTARTS AGE

mysql 1/1 Running 0 4m27s

webapp-mysql-7bd5857746-x6stl 1/1 Running 0 4m27s

All Pods are now running normally.
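The observation that drove this case, that no weave-net Pod existed in kube-system, can be sketched as a yes/no check. The function name `has_pod_with_prefix` is hypothetical; it reads a `kubectl get pods` listing on stdin and succeeds only if some Pod name starts with the given prefix.

```shell
# Hypothetical helper: exit 0 if any pod name in the listing on stdin starts
# with the given prefix, exit 1 otherwise.
# Usage: has_pod_with_prefix <prefix>
has_pod_with_prefix() {
  awk -v p="$1" 'index($1, p) == 1 { found = 1 } END { exit !found }'
}

# Example against a live cluster:
#   if ! kubectl get pods -n kube-system | has_pod_with_prefix weave-net; then
#     echo "weave-net is not running in kube-system"
#   fi
```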

Case 4-2. kube-proxy is not working properly

The kube-proxy Pod is in the CrashLoopBackOff state.

root@controlplane ~ ➜ k get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-668d6bf9bc-nvvkp 1/1 Running 0 31m

coredns-668d6bf9bc-srr86 1/1 Running 0 31m

etcd-controlplane 1/1 Running 0 31m

kube-apiserver-controlplane 1/1 Running 0 31m

kube-controller-manager-controlplane 1/1 Running 0 31m

kube-proxy-xtgm4 0/1 CrashLoopBackOff 3 (35s ago) 83s

kube-scheduler-controlplane 1/1 Running 0 31m

weave-net-sqdz6 2/2 Running 0 5m47s

Check the Pod logs

root@controlplane ~ ✖ kubectl logs -n kube-system kube-proxy-xtgm4

E0601 16:13:02.279231 1 run.go:74] "command failed" err="failed complete: open /var/lib/kube-proxy/configuration.conf: no such file or directory"

The configuration file for kube-proxy does not exist at /var/lib/kube-proxy/configuration.conf.

So where can we find out where this configuration file is supposed to be?

The kube-proxy configuration, as seen through the ConfigMap

root@controlplane /var/lib ✖ k describe configmap kube-proxy -n kube-system

Name: kube-proxy

Namespace: kube-system

...

acceptContentTypes: ""

burst: 0

contentType: ""

kubeconfig: /var/lib/kube-proxy/kubeconfig.conf

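Nothing in the ConfigMap is named configuration.conf; the path that appears there is /var/lib/kube-proxy/kubeconfig.conf. In other words, the problem is in the Pod (DaemonSet) definition, whose --config flag points at a file that does not exist.

Edit the DaemonSet with k edit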

root@controlplane /var/lib ➜ k edit ds kube-proxy -n kube-system

...

containers:

- command:

- /usr/local/bin/kube-proxy

- --config=/var/lib/kube-proxy/configuration.conf

- --hostname-override=$(NODE_NAME)

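Use the edit command to change --config to the correct path (in a default kubeadm cluster this is /var/lib/kube-proxy/config.conf, matching the config.conf key of the kube-proxy ConfigMap). kube-proxy, weave-net (CNI), and fluentd (log collection) are managed by DaemonSets, not Deployments.

Editing the Pod is therefore pointless; keep in mind that the DaemonSet that owns it is what has to be edited.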
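A non-interactive alternative to `k edit` can be sketched as a stream filter over the DaemonSet manifest. The function name `fix_config_flag` is hypothetical, and the target path `/var/lib/kube-proxy/config.conf` is an assumption based on the kubeadm default; check the keys of the kube-proxy ConfigMap before relying on it.

```shell
# Hypothetical helper: rewrite the value of the --config flag in a manifest
# read from stdin and write the result to stdout. Other flags are untouched.
# Usage: fix_config_flag <new-path>
fix_config_flag() {
  sed -E "s|(--config=)[^[:space:]\"']+|\1$1|"
}

# Example against a live cluster (the path is an assumption, verify it with
# `kubectl describe configmap kube-proxy -n kube-system` first):
#   kubectl -n kube-system get ds kube-proxy -o yaml \
#     | fix_config_flag /var/lib/kube-proxy/config.conf \
#     | kubectl replace -f -
```

Because the change is made on the DaemonSet rather than on a Pod, it ends up in the Pod template and survives Pod restarts.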

Confirm that kube-proxy is working properly

root@controlplane /var/lib ➜ k get configmap -n kube-system

NAME DATA AGE

coredns 1 57m

extension-apiserver-authentication 6 57m

kube-apiserver-legacy-service-account-token-tracking 1 57m

kube-proxy 2 57m

kube-root-ca.crt 1 57m

kubeadm-config 1 57m

kubelet-config 1 57m

weave-net 0 57m